Artificial-General-Intelligence

A brief overview of the AGI field

View the Project on GitHub abhinav153/Artificial-General-Intelligence

What is AGI?

This field sits at the intersection of artificial intelligence, neuroscience, and philosophy.

Here are some of the questions it tries to answer:

- What is the nature of consciousness?

- What is intelligence?

What is intelligence?

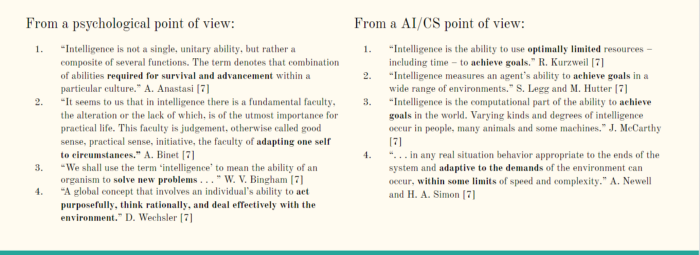

There isn’t an agreed-upon definition of intelligence. Multiple viewpoints have been put forward as to what intelligence is, and they are summarised in the collection cited here [1].

Traditionally, machine learning (ML) is treated as a subset of AI, while AI is a subset of AGI (or, depending on who you ask, a way to achieve AGI). On this view, AGI is the broadest class, containing both AI and ML, and traditional or colloquial AI is generally referred to as “narrow AI” or “weak AI”: the development of systems that deal intelligently with a single, narrow domain.

The problem is that machines today are very good at narrow tasks: AI has excelled in specific domains such as playing Go, spam detection, and Spotify playlist recommendations. However, computers still lack the ability to generalize knowledge to other domains. This is the heart of the issue: the goal is to attain “general intelligence”.

What is General Intelligence? [2]

Qualitatively speaking, though, there is broad agreement in the AGI community on some key features of general intelligence:

- General intelligence involves the ability to achieve a variety of goals, and carry out a variety of tasks, in a variety of different contexts and environments.

- A generally intelligent system should be able to handle problems and situations quite different from those anticipated by its creators.

- A generally intelligent system should be good at generalizing the knowledge it’s gained, so as to transfer this knowledge from one problem or context to others.

- Arbitrarily general intelligence is not possible given realistic resource constraints.

- Real-world systems may display varying degrees of limited generality, but are inevitably going to be a lot more efficient at learning some sorts of things than others; and for any given real-world system, there will be some learning tasks on which it is unacceptably slow. So real-world general intelligences are inevitably somewhat biased toward certain sorts of goals and environments.

- Humans display a higher level of general intelligence than existing AI programs do, and apparently also a higher level than other animals.

- It seems quite unlikely that humans happen to manifest a maximal level of general intelligence, even relative to the goals and environment for which they have been evolutionarily adapted.

Core AGI hypothesis

Another point broadly shared in the AGI community is confidence in what is called the “core AGI hypothesis”:

“The creation and study of synthetic intelligences with sufficiently broad (e.g. human-level) scope and strong generalization capability, is at bottom qualitatively different from the creation and study of synthetic intelligences with significantly narrower scope and weaker generalization capability.”

Approaches in AGI [3]

After this high-level introduction to the scope of AGI, a succinct categorization of the mainstream AGI approaches follows.

Symbolic

The roots of the symbolic approach to AGI reach back to the traditional AI field. The guiding principle for all symbolic systems is the belief that the mind exists mainly to manipulate symbols that represent aspects of the world or themselves. This belief is called the physical symbol system hypothesis.

Emergentist

The emergentist approach to AGI takes the view that higher-level, more abstract symbolic processing arises (or emerges) naturally from lower-level “subsymbolic” dynamics. As an example, consider the classic multilayer neural network, which is in ubiquitous use today. The view here is that a more thorough understanding of the fundamental components of the brain and their interplay may lead to a higher-level understanding of general intelligence as a whole.

Computational Neuroscience

As the name suggests, computational neuroscience explores the principles of neuroscience using computational models and simulations. This approach to AGI falls under the emergentist category. If a robust model of the human brain can be developed, it stands to reason that we may be able to glean insight into which components of the model give rise to higher-level general intelligence.

Developmental Robotics

Infants are the ultimate scientists. They use all of their senses to interact with their environment and over time create a model of their perceived reality. It is argued that general intelligence arises from “the brain’s” constant interaction with its surroundings and environment. Developmental robotics attempts to recreate this process.

Hybrid

In recent years, AGI researchers have begun integrating symbolic and emergentist approaches. The motivation is that, if designed correctly, each system’s strengths can ameliorate the other’s weaknesses. The concept of “cognitive synergy” captures this principle. It argues that higher-level AGI emerges as a result of harmonious interactions among multiple components.

Universalist

The universalist approach leverages a principle employed by many creative designers and inventors. Instead of coming up with an idea that satisfies all of a problem’s inherent limitations, one “dreams big” and develops elaborate, even unrealistic ideas, and later simplifies them to fit within the confines of the proposed problem. In regard to AGI, the so-called universalist approach aims at developing ideal, perfect, or unrealistic models of general intelligence. These models and algorithms may require incredible, even infinite, computing power to be employed. In summary, universalists might argue that one should not limit one’s creativity by any imposed constraints.

The concept of minds (their nature, their implementation, their applications, and so on) is of huge interest to AGI researchers, and to anyone even remotely interested in AI. Arguably, creating artificial minds is the entire job of an AI/AGI researcher.

Specifically, we introduce and discuss these questions: What is a mind? Where do minds come from? How could we test for the presence of a mind in a computer or artificial agent? Could a machine mind ever truly understand semantics?

What is a mind? [4]

SMOM (the standard model of the mind, an attempt to pin down what a system must do in order to be considered a mind): A mind is a functional entity that can think, and thus support intelligent behavior. Humans possess minds, as do many other animals. In natural systems such as these, minds are implemented through brains, one particular class of physical device. However, a key foundational hypothesis in artificial intelligence is that minds are computational entities of a special sort (that is, cognitive systems) that can be implemented through a diversity of physical devices (a concept lately reframed as substrate independence [Bostrom 2003]), whether natural brains, traditional general-purpose computers, or other sufficiently functional forms of hardware or wetware.

So, minds are a sort of abstraction: a “bundle of mental processes” if you will, or a type of “software” that, according to the above, is independent of the hardware it is implemented on. That is, minds could be realized in mediums other than brains (this is often referred to as “multiple realizability” in philosophy of mind).

In essence, according to the paper [4], a mind needs:

- A main working memory module

- A procedural long-term memory (PLTM) module

- A declarative long-term memory (DLTM) module

- A perception interface

- A motor interface

- Working memory serving as the middleman that connects the PLTM, the DLTM, and the perception/motor (P/M) interfaces
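The component list above can be illustrated with a toy Python sketch. This is not the architecture from the paper [4], which describes far more (for example, how a cognitive cycle is scheduled and how learning works); it only shows the wiring in which working memory is the hub that every other module reads from and writes to. All class names, the `percept`/`retrieved`/`motor` keys, and the traffic-light facts are hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class WorkingMemory:
    """Central buffer; the other modules communicate only through it."""
    contents: Dict[str, str] = field(default_factory=dict)


@dataclass
class DeclarativeMemory:
    """Declarative long-term memory (DLTM): stored facts, here a lookup table."""
    facts: Dict[str, str] = field(default_factory=dict)

    def retrieve_into(self, wm: WorkingMemory) -> None:
        # Use the current percept as a cue; put any matching fact in working memory.
        cue = wm.contents.get("percept")
        if cue in self.facts:
            wm.contents["retrieved"] = self.facts[cue]


# A rule pairs a condition on working memory with an action that modifies it.
Condition = Callable[[WorkingMemory], bool]
Action = Callable[[WorkingMemory], None]


@dataclass
class ProceduralMemory:
    """Procedural long-term memory (PLTM): condition/action rules."""
    rules: List[Tuple[Condition, Action]] = field(default_factory=list)

    def fire(self, wm: WorkingMemory) -> None:
        # Fire only the first rule whose condition holds (a simplifying assumption).
        for condition, action in self.rules:
            if condition(wm):
                action(wm)
                return


@dataclass
class Mind:
    procedural: ProceduralMemory
    declarative: DeclarativeMemory
    wm: WorkingMemory = field(default_factory=WorkingMemory)

    def cycle(self, stimulus: str) -> Optional[str]:
        self.wm.contents["percept"] = stimulus      # perception interface
        self.declarative.retrieve_into(self.wm)     # DLTM <-> working memory
        self.procedural.fire(self.wm)               # PLTM <-> working memory
        return self.wm.contents.pop("motor", None)  # motor interface


def build_demo_mind() -> Mind:
    """Assemble a toy mind with one rule and two made-up facts."""
    def has_retrieval(wm: WorkingMemory) -> bool:
        return "retrieved" in wm.contents

    def act_on_retrieval(wm: WorkingMemory) -> None:
        # Turn the retrieved fact into a motor command.
        wm.contents["motor"] = wm.contents.pop("retrieved")

    return Mind(
        procedural=ProceduralMemory([(has_retrieval, act_on_retrieval)]),
        declarative=DeclarativeMemory({"red light": "stop", "green light": "go"}),
    )


if __name__ == "__main__":
    mind = build_demo_mind()
    print(mind.cycle("red light"))   # stop
    print(mind.cycle("blue light"))  # None (no stored fact, so no rule fires)
```

Note that neither long-term memory talks to the perception or motor side directly; each step reads or writes the shared `WorkingMemory`, which is the "middleman" role described in the last list item.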

References

1. Legg, Shane, and Marcus Hutter. “A collection of definitions of intelligence.” Frontiers in Artificial Intelligence and Applications 157 (2007): 17.

2. Goertzel, Ben. “Artificial general intelligence: concept, state of the art, and future prospects.” Journal of Artificial General Intelligence 5.1 (2014): 1–48.

3. http://mathnathan.com/2014/07/artificial-general-intelligence-concept-state-of-the-art-and-future-prospects/

4. Laird, John E., Christian Lebiere, and Paul S. Rosenbloom. “A standard model of the mind: Toward a common computational framework across artificial intelligence, cognitive science, neuroscience, and robotics.” AI Magazine 38.4 (2017): 13–26.